Offline (Air-Gapped) Installation of KubeSphere

Official documentation:

https://kubesphere.io/zh/docs/v3.4/installing-on-linux/introduction/air-gapped-installation/

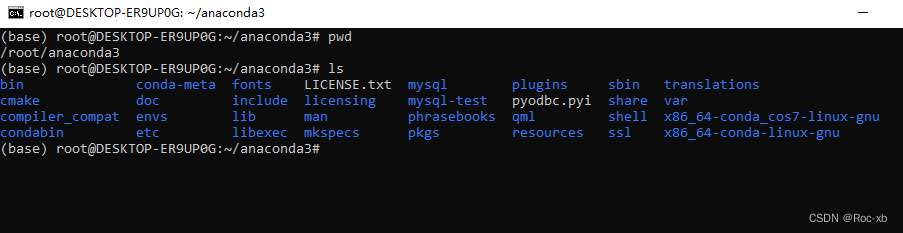

1. Prepare the Docker environment

[root@node1 ~]# tar -xf docker-24.0.6.tgz

[root@node1 ~]# ls

anaconda-ks.cfg calico-v3.26.1.tar docker kk kubekey-v3.0.13-linux-amd64.tar.gz kubesphere.tar.gz manifest-sample.yaml

calicoctl-linux-amd64 config-sample.yaml docker-24.0.6.tgz kubekey kubekey.zip manifest-all.yaml passwd

[root@node1 ~]# cd docker

[root@node1 docker]# ls

containerd containerd-shim-runc-v2 ctr docker dockerd docker-init docker-proxy runc

[root@node1 docker]# cd ..

[root@node1 ~]# ls

anaconda-ks.cfg calico-v3.26.1.tar docker kk kubekey-v3.0.13-linux-amd64.tar.gz kubesphere.tar.gz manifest-sample.yaml

calicoctl-linux-amd64 config-sample.yaml docker-24.0.6.tgz kubekey kubekey.zip manifest-all.yaml passwd

[root@node1 ~]# cp docker/* /usr/bin

[root@node1 ~]# vim /etc/systemd/system/docker.service

[root@node1 ~]# chmod 644 /etc/systemd/system/docker.service

[root@node1 ~]# systemctl daemon-reload

[root@node1 ~]# systemctl start docker

[root@node1 ~]# systemctl enable docker

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /etc/systemd/system/docker.service.

[root@node1 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
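Before copying the extracted files into /usr/bin, it can help to confirm that the bundle is complete. The sketch below checks for the eight binaries listed in the `ls docker` output above. The function name `check_docker_bundle` is hypothetical, and it only checks that each file exists and is executable. It does not check versions.

```shell
#!/usr/bin/env bash
# Binaries found in the docker-24.0.6.tgz bundle (from the `ls docker` output above).
DOCKER_BUNDLE_BINS=(containerd containerd-shim-runc-v2 ctr docker dockerd docker-init docker-proxy runc)

# check_docker_bundle DIR
# Reports each expected binary that is missing from DIR or not executable.
# Returns 0 only when every binary is present and executable.
check_docker_bundle() {
  local dir="$1" bin status=0
  for bin in "${DOCKER_BUNDLE_BINS[@]}"; do
    if [[ ! -x "$dir/$bin" ]]; then
      echo "missing or not executable: $dir/$bin" >&2
      status=1
    fi
  done
  return "$status"
}
```

Usage: `check_docker_bundle ~/docker && cp ~/docker/* /usr/bin/`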

2. Configure the Docker service

[root@node1 ~]# vim /etc/systemd/system/docker.service

[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
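You can also write this unit in one step instead of editing it interactively with vim. This is a sketch that assumes the unit content shown above. It spells the open-files limit directive `LimitNOFILE`, which is the name systemd recognizes. `write_docker_unit` is a hypothetical helper, and the target path is a parameter so you can try it on a scratch file first. It sets mode 0644 because unit files do not need the execute bit.

```shell
#!/usr/bin/env bash
# write_docker_unit [PATH]
# Writes the docker.service unit to PATH (default /etc/systemd/system/docker.service)
# and makes it readable but not executable.
write_docker_unit() {
  local unit_path="${1:-/etc/systemd/system/docker.service}"
  # The quoted 'EOF' keeps $MAINPID literal, so systemd (not the shell) expands it.
  cat > "$unit_path" <<'EOF'
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP $MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF
  # systemd warns about unit files marked executable, so use 0644.
  chmod 0644 "$unit_path"
}
```

Usage on the node: `write_docker_unit && systemctl daemon-reload && systemctl start docker && systemctl enable docker`. These are the same systemctl steps as above. The `--now` flag is not used because the systemd in CentOS 7 is older than that option.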

3. Install docker-compose

Copy the docker-compose binary to /usr/local/bin and make it executable:

[root@node1 bin]# chmod +x /usr/local/bin/docker-compose

Then create a symlink:

[root@node1 bin]# ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose

Verify the installation:

[root@node1 bin]# docker-compose --version

Docker Compose version v2.2.2

4. Install Harbor

Note: this step is optional; Harbor does not have to be installed manually.

Extract the installer:

tar -zxvf harbor-offline-installer-v2.5.3.tgz

Create the configuration file from the template (the original .tmpl file is kept as a backup):

cp harbor.yml.tmpl harbor.yml

vi harbor.yml

Comment out the https section. The resulting configuration (HTTP only) is:

hostname: 192.168.31.41
http:
  port: 80
harbor_admin_password: Harbor12345
database:
  password: root123
  max_idle_conns: 100
  max_open_conns: 900
data_volume: /root/harbor/data
trivy:
  ignore_unfixed: false
  skip_update: false
  offline_scan: false
  insecure: false
jobservice:
  max_job_workers: 10
notification:
  webhook_job_max_retry: 10
chart:
  absolute_url: disabled
log:
  level: info
  local:
    rotate_count: 50
    rotate_size: 200M
    location: /var/log/harbor
_version: 2.5.0
proxy:
  http_proxy:
  https_proxy:
  no_proxy:
  components:
    - core
    - jobservice
    - trivy
upload_purging:
  enabled: true
  age: 168h
  interval: 24h
  dryrun: false
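The two structural edits to the template, setting `hostname` and commenting out the `https` block, can also be scripted. This is a minimal sketch. It assumes the template's layout, where `https:` is a top-level key followed by indented child lines. `prepare_harbor_yml` is a hypothetical helper. Other settings such as the passwords and `data_volume` still have to be edited by hand.

```shell
#!/usr/bin/env bash
# prepare_harbor_yml TEMPLATE OUTPUT HOSTNAME
# Writes OUTPUT from TEMPLATE with `hostname:` set to HOSTNAME and the
# top-level `https:` block (the key plus its indented lines) commented out,
# so Harbor serves plain HTTP only. Everything else is copied unchanged.
prepare_harbor_yml() {
  local tmpl="$1" out="$2" host="$3"
  awk -v host="$host" '
    /^hostname:/            { print "hostname: " host; next }
    /^https:/               { in_https = 1; print "# " $0; next }
    in_https && /^[^ \t#]/  { in_https = 0 }   # the next top-level key ends the block
    in_https && /^[ \t]/    { print "# " $0; next }
    { print }
  ' "$tmpl" > "$out"
}
```

Usage: `prepare_harbor_yml harbor.yml.tmpl harbor.yml 192.168.31.41`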

Run the installer:

[root@node1 harbor]# ./install.sh

[Step 0]: checking if docker is installed ...

Note: docker version: 24.0.6

[Step 1]: checking docker-compose is installed ...

Note: docker-compose version: 2.2.2

[Step 2]: loading Harbor images ...

Loaded image: goharbor/harbor-portal:v2.5.3

Loaded image: goharbor/harbor-core:v2.5.3

Loaded image: goharbor/redis-photon:v2.5.3

Loaded image: goharbor/prepare:v2.5.3

Loaded image: goharbor/harbor-db:v2.5.3

Loaded image: goharbor/chartmuseum-photon:v2.5.3

Loaded image: goharbor/harbor-jobservice:v2.5.3

Loaded image: goharbor/harbor-registryctl:v2.5.3

Loaded image: goharbor/nginx-photon:v2.5.3

Loaded image: goharbor/notary-signer-photon:v2.5.3

Loaded image: goharbor/harbor-log:v2.5.3

Loaded image: goharbor/harbor-exporter:v2.5.3

Loaded image: goharbor/registry-photon:v2.5.3

Loaded image: goharbor/notary-server-photon:v2.5.3

Loaded image: goharbor/trivy-adapter-photon:v2.5.3

[Step 3]: preparing environment ...

[Step 4]: preparing harbor configs ...

prepare base dir is set to /root/harbor

WARNING:root:WARNING: HTTP protocol is insecure. Harbor will deprecate http protocol in the future. Please make sure to upgrade to https

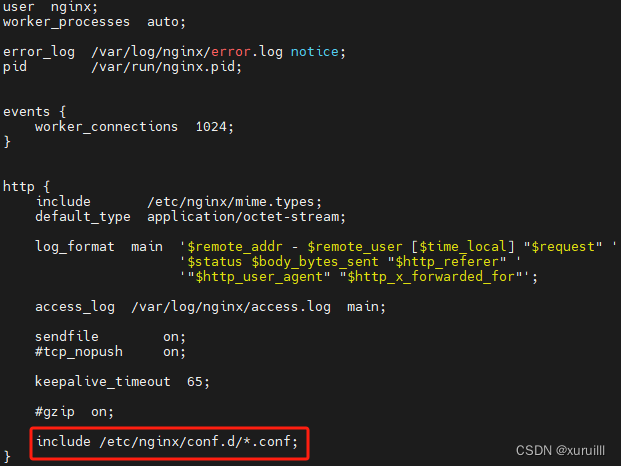

Clearing the configuration file: /config/portal/nginx.conf

Clearing the configuration file: /config/log/logrotate.conf

Clearing the configuration file: /config/log/rsyslog_docker.conf

Clearing the configuration file: /config/nginx/nginx.conf

Clearing the configuration file: /config/core/env

Clearing the configuration file: /config/core/app.conf

Clearing the configuration file: /config/registry/passwd

Clearing the configuration file: /config/registry/config.yml

Clearing the configuration file: /config/registry/root.crt

Clearing the configuration file: /config/registryctl/env

Clearing the configuration file: /config/registryctl/config.yml

Clearing the configuration file: /config/db/env

Clearing the configuration file: /config/jobservice/env

Clearing the configuration file: /config/jobservice/config.yml

Generated configuration file: /config/portal/nginx.conf

Generated configuration file: /config/log/logrotate.conf

Generated configuration file: /config/log/rsyslog_docker.conf

Generated configuration file: /config/nginx/nginx.conf

Generated configuration file: /config/core/env

Generated configuration file: /config/core/app.conf

Generated configuration file: /config/registry/config.yml

Generated configuration file: /config/registryctl/env

Generated configuration file: /config/registryctl/config.yml

Generated configuration file: /config/db/env

Generated configuration file: /config/jobservice/env

Generated configuration file: /config/jobservice/config.yml

loaded secret from file: /data/secret/keys/secretkey

Generated configuration file: /compose_location/docker-compose.yml

Clean up the input dir

Note: stopping existing Harbor instance ...

[+] Running 10/10

⠿ Container harbor-jobservice Removed 0.7s

⠿ Container registryctl Removed 10.1s

⠿ Container nginx Removed 0.0s

⠿ Container harbor-portal Removed 0.7s

⠿ Container harbor-core Removed 0.2s

⠿ Container registry Removed 0.3s

⠿ Container redis Removed 0.4s

⠿ Container harbor-db Removed 0.4s

⠿ Container harbor-log Removed 10.1s

⠿ Network harbor_harbor Removed 0.0s

[Step 5]: starting Harbor ...

[+] Running 10/10

⠿ Network harbor_harbor Created 0.0s

⠿ Container harbor-log Started 0.4s

⠿ Container harbor-portal Started 1.6s

⠿ Container redis Started 1.7s

⠿ Container registryctl Started 1.5s

⠿ Container registry Started 1.6s

⠿ Container harbor-db Started 1.7s

⠿ Container harbor-core Started 2.4s

⠿ Container nginx Started 3.3s

⠿ Container harbor-jobservice Started 3.2s

✔ ----Harbor has been installed and started successfully.----

Check the Harbor containers:

[root@node1 harbor]# docker-compose ps

NAME COMMAND SERVICE STATUS PORTS

harbor-core "/harbor/entrypoint.…" core running (healthy)

harbor-db "/docker-entrypoint.…" postgresql running (healthy)

harbor-jobservice "/harbor/entrypoint.…" jobservice running (healthy)

harbor-log "/bin/sh -c /usr/loc…" log running (healthy) 127.0.0.1:1514->10514/tcp

harbor-portal "nginx -g 'daemon of…" portal running (healthy)

nginx "nginx -g 'daemon of…" proxy running (healthy) 0.0.0.0:80->8080/tcp, :::80->8080/tcp

redis "redis-server /etc/r…" redis running (healthy)

registry "/home/harbor/entryp…" registry running (healthy)

registryctl "/home/harbor/start.…" registryctl running (healthy)
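When there are many containers, it is quicker to list only those that do not report `(healthy)`. This sketch filters `docker-compose ps` output like the listing above. `unhealthy_services` is a hypothetical helper. It assumes the first line is the header and that the container name is the first column.

```shell
#!/usr/bin/env bash
# unhealthy_services
# Reads `docker-compose ps` output on stdin, skips the header line and blank
# lines, and prints the NAME of every container whose line lacks "(healthy)".
unhealthy_services() {
  awk 'NR > 1 && NF && $0 !~ /\(healthy\)/ { print $1 }'
}
```

Usage, from the harbor directory: `docker-compose ps | unhealthy_services`. Empty output means every container reported healthy.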

5. Registry initialization errors

[root@node1 ~]# ./kk init registry -f config-sample.yaml -a kubesphere.tar.gz

_ __ _ _ __

| | / / | | | | / /

| |/ / _ _| |__ ___| |/ / ___ _ _

| \| | | | '_ \ / _ \ \ / _ \ | | |

| |\ \ |_| | |_) | __/ |\ \ __/ |_| |

\_| \_/\__,_|_.__/ \___\_| \_/\___|\__, |

__/ |

|___/

16:10:02 CST [GreetingsModule] Greetings

16:10:32 CST failed: [node1]

error: Pipeline[InitRegistryPipeline] execute failed: Module[GreetingsModule] exec failed:

failed: [node1] execute task timeout, Timeout=30s

Fix:

[root@node1 ~]# vi /etc/hosts

Append the following entries:

10.1.1.2 node1.cluster.local node1

10.1.1.2 dockerhub.kubekey.local

10.1.1.2 lb.kubesphere.local

Then replace the placeholder IP with your own machine's address:

[root@node1 ~]# sed -i 's/10.1.1.2/192.168.31.41/g' /etc/hosts
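Instead of appending placeholder entries and rewriting them with sed, you can append the entries with the real IP directly. Entries whose host name already exists are skipped, so a re-run does not create duplicates. This is a sketch. The helper names `has_host` and `add_kk_hosts` are hypothetical, and the host names are the three from the entries above.

```shell
#!/usr/bin/env bash
# has_host NAME FILE
# Succeeds if NAME appears as a hostname (any field after the IP) on a
# non-comment line of FILE.
has_host() {
  awk -v n="$1" '
    /^[ \t]*#/ { next }
    { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
    END { exit !found }
  ' "$2"
}

# add_kk_hosts IP [HOSTS_FILE]
# Appends the entries KubeKey needs, mapped to IP, unless the first hostname
# of an entry is already present, so running it twice adds nothing new.
add_kk_hosts() {
  local ip="$1" hosts_file="${2:-/etc/hosts}" entry
  for entry in "node1.cluster.local node1" "dockerhub.kubekey.local" "lb.kubesphere.local"; do
    if ! has_host "${entry%% *}" "$hosts_file"; then
      printf '%s %s\n' "$ip" "$entry" >> "$hosts_file"
    fi
  done
}
```

Usage, as root: `add_kk_hosts 192.168.31.41`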

Run the initialization command again:

[root@node1 ~]# ./kk init registry -f config-sample.yaml -a kubesphere.tar.gz


16:14:04 CST [GreetingsModule] Greetings

16:14:04 CST message: [node1]

Greetings, KubeKey!

16:14:04 CST success: [node1]

16:14:04 CST [UnArchiveArtifactModule] Check the KubeKey artifact md5 value

16:14:04 CST success: [LocalHost]

16:14:04 CST [UnArchiveArtifactModule] UnArchive the KubeKey artifact

/root/kubekey/cni/amd64/calicoctl

/root/kubekey/cni/v1.2.0/amd64/cni-plugins-linux-amd64-v1.2.0.tgz

/root/kubekey/cni/v3.26.1/amd64/calicoctl

/root/kubekey/crictl/v1.24.0/amd64/crictl-v1.24.0-linux-amd64.tar.gz

/root/kubekey/docker/24.0.6/amd64/docker-24.0.6.tgz

/root/kubekey/etcd/v3.4.13/amd64/etcd-v3.4.13-linux-amd64.tar.gz

/root/kubekey/helm/v3.9.0/amd64/helm

/root/kubekey/images/blobs/sha256/019d8da33d911d9baabe58ad63dea2107ed15115cca0fc27fc0f627e82a695c1

----

/root/kubekey/images/index.json

/root/kubekey/images/oci-layout

/root/kubekey/kube/v1.22.12/amd64/kubeadm

/root/kubekey/kube/v1.22.12/amd64/kubectl

/root/kubekey/kube/v1.22.12/amd64/kubelet

16:16:03 CST success: [LocalHost]

16:16:03 CST [UnArchiveArtifactModule] Create the KubeKey artifact Md5 file

16:16:33 CST success: [LocalHost]

16:16:33 CST [FATA] [registry] node not found in the roleGroups of the configuration file

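The error says that roleGroups contains no registry node: the configuration file must specify which node hosts the image registry.

vi config-sample.yaml

This role definition was missing under roleGroups:

registry:
- node1

The complete file: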

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: node1, address: 192.168.31.41, internalAddress: 192.168.31.41, user: root, password: "123456"}
  roleGroups:
    etcd:
    - node1
    control-plane:
    - node1
    worker:
    - node1
    registry:
    - node1
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    # internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.22.12
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: docker
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  registry:
    type: harbor
    domain: dockerhub.kubekey.local
    tls:
      selfSigned: true
      certCommonName: dockerhub.kubekey.local
    auths:
      "dockerhub.kubekey.local":
        username: admin
        password: Harbor12345
    privateRegistry: "dockerhub.kubekey.local"
    namespaceOverride: "kubesphereio"
    # privateRegistry: ""
    # namespaceOverride: ""
    registryMirrors: []
    insecureRegistries: []
  addons: []
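`registry` appears twice in this file: once as the node list under `roleGroups` and once as the registry settings under `spec`. It is easy to add only the second one. This is a rough check that assumes the two-space indentation used above. `has_registry_role` is a hypothetical helper. It only looks for the key, not for the node names under it.

```shell
#!/usr/bin/env bash
# has_registry_role FILE
# Succeeds if a `registry:` key exists directly under `roleGroups:`.
# Assumes config-sample.yaml indentation: roleGroups at 2 spaces, its roles
# at 4 spaces. The `registry:` block under `spec` (also at 2 spaces) is
# deliberately not counted.
has_registry_role() {
  awk '
    /^  roleGroups:/           { inside = 1; next }
    inside && /^  [^ ]/        { inside = 0 }    # the next 2-space key closes roleGroups
    inside && /^    registry:/ { found = 1 }
    END { exit !found }
  ' "$1"
}
```

Usage: `has_registry_role config-sample.yaml || echo "add a registry role under roleGroups"`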

The next run fails with a different error:

[root@node1 ~]# ./kk init registry -f config-sample.yaml -a kubesphere.tar.gz


16:45:43 CST [GreetingsModule] Greetings

16:45:43 CST message: [node1]

Greetings, KubeKey!

16:45:43 CST success: [node1]

16:45:43 CST [UnArchiveArtifactModule] Check the KubeKey artifact md5 value

16:46:05 CST success: [LocalHost]

16:46:05 CST [UnArchiveArtifactModule] UnArchive the KubeKey artifact

16:46:05 CST skipped: [LocalHost]

16:46:05 CST [UnArchiveArtifactModule] Create the KubeKey artifact Md5 file

16:46:05 CST skipped: [LocalHost]

16:46:05 CST [RegistryPackageModule] Download registry package

16:46:05 CST message: [localhost]

downloading amd64 harbor v2.5.3 ...

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- 0:00:19 --:--:-- 0curl: (6) Could not resolve host: kubernetes-release.pek3b.qingstor.com; Unknown error

16:46:26 CST message: [LocalHost]

Failed to download harbor binary: curl -L -o /root/kubekey/registry/harbor/v2.5.3/amd64/harbor-offline-installer-v2.5.3.tgz https://kubernetes-release.pek3b.qingstor.com/harbor/releases/download/v2.5.3/harbor-offline-installer-v2.5.3.tgz error: exit status 6

16:46:26 CST failed: [LocalHost]

error: Pipeline[InitRegistryPipeline] execute failed: Module[RegistryPackageModule] exec failed:

failed: [LocalHost] [DownloadRegistryPackage] exec failed after 1 retries: Failed to download harbor binary: curl -L -o /root/kubekey/registry/harbor/v2.5.3/amd64/harbor-offline-installer-v2.5.3.tgz https://kubernetes-release.pek3b.qingstor.com/harbor/releases/download/v2.5.3/harbor-offline-installer-v2.5.3.tgz error: exit status 6

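The download failed. The host is offline, so kk could not fetch the Harbor package, and the file /root/kubekey/registry/harbor/v2.5.3/amd64/harbor-offline-installer-v2.5.3.tgz did not exist locally.

Fix: copy the package to that path.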
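You can script this step so that the directory layout matches the path kk printed. This is a sketch that assumes kk is run from /root, so its working directory is /root/kubekey. The defaults (Harbor v2.5.3, amd64) come from the log above. `stage_harbor_pkg` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# stage_harbor_pkg TARBALL [KK_DIR] [VERSION] [ARCH]
# Copies the Harbor offline installer to
# KK_DIR/registry/harbor/VERSION/ARCH/harbor-offline-installer-VERSION.tgz
# (the path kk tried to download to) and prints the destination.
stage_harbor_pkg() {
  local tarball="$1" kk_dir="${2:-/root/kubekey}" version="${3:-v2.5.3}" arch="${4:-amd64}"
  local dest="$kk_dir/registry/harbor/$version/$arch"
  mkdir -p "$dest" &&
    cp "$tarball" "$dest/harbor-offline-installer-$version.tgz" &&
    echo "$dest/harbor-offline-installer-$version.tgz"
}
```

Usage: `stage_harbor_pkg ~/harbor-offline-installer-v2.5.3.tgz`, then rerun `./kk init registry -f config-sample.yaml -a kubesphere.tar.gz`.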

After that, initialization succeeds:

17:28:50 CST [InitRegistryModule] Generate registry Certs

[certs] Generating "ca" certificate and key

[certs] dockerhub.kubekey.local serving cert is signed for DNS names [dockerhub.kubekey.local localhost node1] and IPs [127.0.0.1 ::1 192.168.31.41]

17:28:50 CST success: [LocalHost]

17:28:50 CST [InitRegistryModule] Synchronize certs file

17:28:51 CST success: [node1]

17:28:51 CST [InitRegistryModule] Synchronize certs file to all nodes

17:28:52 CST success: [node1]

17:28:52 CST [InstallRegistryModule] Sync docker binaries

17:28:52 CST skipped: [node1]

17:28:52 CST [InstallRegistryModule] Generate docker service

17:28:52 CST skipped: [node1]

17:28:52 CST [InstallRegistryModule] Generate docker config

17:28:52 CST skipped: [node1]

17:28:52 CST [InstallRegistryModule] Enable docker

17:28:52 CST skipped: [node1]

17:28:52 CST [InstallRegistryModule] Install docker compose

17:28:53 CST success: [node1]

17:28:53 CST [InstallRegistryModule] Sync harbor package

17:29:07 CST success: [node1]

17:29:07 CST [InstallRegistryModule] Generate harbor service

17:29:08 CST success: [node1]

17:29:08 CST [InstallRegistryModule] Generate harbor config

17:29:08 CST success: [node1]

17:29:08 CST [InstallRegistryModule] start harbor

Local image registry created successfully. Address: dockerhub.kubekey.local

17:30:14 CST success: [node1]

17:30:14 CST [ChownWorkerModule] Chown ./kubekey dir

17:30:14 CST success: [LocalHost]

17:30:14 CST Pipeline[InitRegistryPipeline] execute successfully

6. Create the cluster

[root@node1 ~]# ./kk create cluster -f config-sample.yaml -a kubesphere.tar.gz

17:33:38 CST [CopyImagesToRegistryModule] Copy images to a private registry from an artifact OCI Path

17:33:38 CST Source: oci:/root/kubekey/images:calico:cni:v3.26.1-amd64

17:33:38 CST Destination: docker://dockerhub.kubekey.local/kubesphereio/cni:v3.26.1-amd64

Getting image source signatures

Getting image source signatures

Getting image source signatures

Getting image source signatures

Getting image source signatures

17:33:38 CST success: [LocalHost]

17:33:38 CST [CopyImagesToRegistryModule] Push multi-arch manifest to private registry

17:33:38 CST message: [LocalHost]

get manifest list failed by module cache

17:33:38 CST failed: [LocalHost]

error: Pipeline[CreateClusterPipeline] execute failed: Module[CopyImagesToRegistryModule] exec failed:

failed: [LocalHost] [PushManifest] exec failed after 1 retries: get manifest list failed by module cache

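Create the Harbor projects that the images are pushed into, using the create_project_harbor.sh script from the official documentation:

[root@node1 ~]# sh ./create_project_harbor.sh

Watch out for character-encoding problems: the script copied from the official site failed as-is. The corrected version follows.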

vi create_project_harbor.sh

#!/usr/bin/env bash

url="https://dockerhub.kubekey.local"
user="admin"
passwd="Harbor12345"

harbor_projects=(library
    kubesphereio
    kubesphere
    argoproj
    calico
    coredns
    openebs
    csiplugin
    minio
    mirrorgooglecontainers
    osixia
    prom
    thanosio
    jimmidyson
    grafana
    elastic
    istio
    jaegertracing
    jenkins
    weaveworks
    openpitrix
    joosthofman
    nginxdemos
    fluent
    kubeedge
    openpolicyagent
)

for project in "${harbor_projects[@]}"; do
    echo "creating $project"
    curl -u "${user}:${passwd}" -X POST -H "Content-Type: application/json" "${url}/api/v2.0/projects" -d "{ \"project_name\": \"${project}\", \"public\": true}" -k  # -k added: skip TLS verification for the self-signed certificate
done

After rebooting the server for a capacity expansion, I ran the registry initialization again:

./kk init registry -f config-sample.yaml -a kubesphere.tar.gz

It failed with:

Error response from daemon: driver failed programming external connectivity on endpoint nginx (2fa557486fa4c826d124bedb59396bdbabbb63437892ea9ff7178562b2b51a9a): Error starting userland proxy: listen tcp4 0.0.0.0:443: bind: address already in use: Process exited with status 1

The cause was a local nginx service that was already running and listening on port 443.

Fix: stop it with nginx -s stop
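Before stopping anything, you can confirm which process actually holds port 443 by filtering `ss -ltnp` output. This is a sketch. It assumes the iproute2 `ss` column layout (State, Recv-Q, Send-Q, Local Address:Port, Peer Address:Port, Process), and `who_listens` is a hypothetical helper. Process names only appear in that output when it is run as root.

```shell
#!/usr/bin/env bash
# who_listens PORT
# Reads `ss -ltnp` output on stdin and prints the name of each process with a
# LISTEN socket on PORT (on any address). Column 4 is "Local Address:Port";
# the process is shown as users:(("name",pid=...,fd=...)).
who_listens() {
  local port="$1"
  awk -v port="$port" '$1 == "LISTEN" && $4 ~ (":" port "$")' |
    sed -n 's/.*users:(("\([^"]*\)".*/\1/p' |
    sort -u
}
```

Usage: `ss -ltnp | who_listens 443`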

[root@node1 ~]# ./kk create cluster -f config-sample.yaml -a kubesphere.tar.gz

pull image failed: Failed to exec command: sudo -E /bin/bash -c "env PATH=$PATH docker pull dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.26.1 --platform amd64"

Error response from daemon: unknown: repository kubesphereio/pod2daemon-flexvol not found: Process exited with status 1

14:59:30 CST failed: [node1]

error: Pipeline[CreateClusterPipeline] execute failed: Module[PullModule] exec failed:

failed: [node1] [PullImages] exec failed after 3 retries: pull image failed: Failed to exec command: sudo -E /bin/bash -c "env PATH=$PATH docker pull dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.26.1 --platform amd64"

Error response from daemon: unknown: repository kubesphereio/pod2daemon-flexvol not found: Process exited with status 1

[root@node1 ~]#

The image is missing from the private registry. Load it from a tarball and tag it:

docker load -i pod2daemon-flexvo.tar

docker tag 36a93ec1aace dockerhub.kubekey.local/kubesphereio/pod2daemon-flexvol:v3.26.1
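Tagging alone does not put the image into Harbor. It also has to be pushed, otherwise the pull keeps failing with `repository ... not found`. This sketch assumes the archive carries the tag `calico/pod2daemon-flexvol:v3.26.1`, whereas the step above tags by image ID instead. It also assumes `docker login dockerhub.kubekey.local` has already been run. `private_ref` and `load_tag_push` are hypothetical helpers. `private_ref` drops the source registry and namespace and applies the `privateRegistry` and `namespaceOverride` values from config-sample.yaml, which yields the reference kk tried to pull.

```shell
#!/usr/bin/env bash
# private_ref SRC_REF [REGISTRY] [NAMESPACE]
# Maps an upstream image reference to the private registry layout
# (privateRegistry + namespaceOverride): everything before the last "/"
# is replaced with REGISTRY/NAMESPACE.
private_ref() {
  local src="$1" registry="${2:-dockerhub.kubekey.local}" ns="${3:-kubesphereio}"
  printf '%s/%s/%s\n' "$registry" "$ns" "${src##*/}"
}

# load_tag_push TARBALL SRC_REF
# Loads an image archive, tags SRC_REF for the private registry and pushes it.
# Assumes the archive contains SRC_REF and that `docker login` was done.
load_tag_push() {
  local tarball="$1" src="$2" dst
  dst="$(private_ref "$src")"
  docker load -i "$tarball" &&
    docker tag "$src" "$dst" &&
    docker push "$dst"
}
```

Usage: `load_tag_push pod2daemon-flexvo.tar calico/pod2daemon-flexvol:v3.26.1`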

The next error reported a missing certificate, so the certificates were the problem. First delete the cluster:

./kk delete cluster

Delete the certificates in /root/kubekey/pki/etcd:

[root@node1 etcd]# ls

admin-node1-key.pem admin-node1.pem ca-key.pem ca.pem member-node1-key.pem member-node1.pem node-node1-key.pem node-node1.pem

[root@node1 etcd]# pwd

/root/kubekey/pki/etcd

Then update the IP addresses in these files under /root/kubekey/node1:

etcd.env

10-kubeadm.conf

[root@node1 node1]# ls

10-kubeadm.conf backup-etcd.timer etcd-backup.sh etcd.service harborSerivce k8s-certs-renew.service k8s-certs-renew.timer kubelet.service local-volume.yaml nodelocaldnsConfigmap.yaml

backup-etcd.service coredns-svc.yaml etcd.env harborConfig initOS.sh k8s-certs-renew.sh kubeadm-config.yaml kubesphere.yaml network-plugin.yaml nodelocaldns.yaml

[root@node1 node1]# pwd

/root/kubekey/node1
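The cleanup above removes the generated etcd certificates and replaces the old IP in the node's generated files. These two steps can be combined into one helper. This is a sketch. It assumes OLD_IP is the address those files were originally generated with; the post does not say what that was. `reset_kk_node_files` is a hypothetical helper that only touches the two files named above. Run it after `./kk delete cluster`.

```shell
#!/usr/bin/env bash
# reset_kk_node_files KK_DIR NODE OLD_IP NEW_IP
# Deletes the generated etcd certificates under KK_DIR/pki/etcd and replaces
# OLD_IP with NEW_IP in KK_DIR/NODE/etcd.env and KK_DIR/NODE/10-kubeadm.conf.
reset_kk_node_files() {
  local kk_dir="$1" node="$2" old_ip="$3" new_ip="$4" old_re f
  rm -f "$kk_dir"/pki/etcd/*.pem
  # Escape the dots so sed matches OLD_IP literally ("." alone matches any character).
  old_re=$(printf '%s' "$old_ip" | sed 's/\./\\./g')
  for f in etcd.env 10-kubeadm.conf; do
    if [[ -f "$kk_dir/$node/$f" ]]; then
      sed -i "s/$old_re/$new_ip/g" "$kk_dir/$node/$f"
    fi
  done
}
```

Usage: `reset_kk_node_files /root/kubekey node1 OLD_IP 192.168.31.41`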

Another error:

Please wait for the installation to complete: >>--->

14:33:26 CST failed: [node1]

error: Pipeline[CreateClusterPipeline] execute failed: Module[CheckResultModule] exec failed:

failed: [node1] execute task timeout, Timeout=2h

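Possible causes of this timeout are insufficient storage, image pull failures, or images pushed to the wrong repository. The fix here was to re-tag and push the images again.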
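While the installer waits, you can find out which of these causes applies by looking for pods that are not ready. This sketch filters `kubectl get pods -A --no-headers` output, whose first four columns are NAMESPACE, NAME, READY and STATUS. `not_ready_pods` is a hypothetical helper. Pods stuck in `ImagePullBackOff` or `ErrImagePull` point to the image or repository problems. For the storage question, run `df -h`.

```shell
#!/usr/bin/env bash
# not_ready_pods
# Reads `kubectl get pods -A --no-headers` on stdin and prints
# "namespace/name READY STATUS" for pods that are not Running, or are Running
# with fewer ready containers than expected. Completed pods are ignored.
not_ready_pods() {
  awk '{
    split($3, r, "/")
    if ($4 == "Completed") next
    if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2, $3, $4
  }'
}
```

Usage: `kubectl get pods -A --no-headers | not_ready_pods`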

Successful run:

namespace/kubesphere-system unchanged

serviceaccount/ks-installer unchanged

customresourcedefinition.apiextensions.k8s.io/clusterconfigurations.installer.kubesphere.io unchanged

clusterrole.rbac.authorization.k8s.io/ks-installer unchanged

clusterrolebinding.rbac.authorization.k8s.io/ks-installer unchanged

deployment.apps/ks-installer unchanged

Warning: resource clusterconfigurations/ks-installer is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

clusterconfiguration.installer.kubesphere.io/ks-installer configured

15:40:14 CST success: [node1]

#####################################################

### Welcome to KubeSphere! ###

#####################################################

Console: http://192.168.31.41:30880

Account: admin

Password: P@88w0rd

NOTES:

1. After you log into the console, please check the

monitoring status of service components in

"Cluster Management". If any service is not

ready, please wait patiently until all components

are up and running.

2. Please change the default password after login.

#####################################################

https://kubesphere.io 2024-06-28 14:48:07

#####################################################

15:40:16 CST success: [node1]

15:40:16 CST Pipeline[CreateClusterPipeline] execute successfully

Installation is complete.

Please check the result using the command:

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

Note: the Linux distribution used here is CentOS 7.8.
